Best Stalk Determination

We've now produced a number of 3D grasping points for each frame, so we need to decide which stalk is best to grasp:

Based on the manipulator's dimensions and shape, stalks that are not graspable are eliminated. These are stalks where another stalk blocks the predicted path the manipulator would take to insert the sensor, and stalks the manipulator cannot reach. Stalks with unrealistic positions are eliminated as well. Such positions can be caused by sporadic segmentation output, misalignment between the depth map and the image, or mis-measurements in the depth map. The best stalk is then chosen from those remaining using a heuristic weighting function. This function combines the stalk's height and width, the confidence of the segmentation model's prediction, and the stalk's distance from the arm's optimal grasping position. It favors stalks that are more easily graspable and have a higher chance of successful insertion. For a different task, the relative weights of these factors could be adjusted to reflect the conditions most favorable to that manipulator.
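The filtering and weighting step can be sketched as follows. This is a minimal illustration under stated assumptions, not the pipeline's actual implementation. The names `Stalk`, `is_valid`, `score`, and `best_stalk` are hypothetical, and so are all the numeric constants.

The sketch also makes several simplifying assumptions:

* The blocking test is assumed to be precomputed into a `blocked` flag.
* Reachability is approximated by a simple radius from the arm base.
* An implausible width or height stands in for the "unrealistic position" checks.
* The score is assumed to be a linear weighted sum. The text does not give the form of the weighting function.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in the arm's base frame, metres

@dataclass
class Stalk:
    grasp_point: Point     # 3D grasping point computed for this stalk
    height: float          # visible stalk height (m)
    width: float           # stalk width (m)
    confidence: float      # segmentation model confidence in [0, 1]
    blocked: bool = False  # assumed precomputed: another stalk lies on the insertion path

# Hypothetical, manipulator-specific parameters (not taken from the text).
OPTIMAL_GRASP: Point = (0.5, 0.0, 0.3)  # assumed ideal grasp position for the arm
MAX_REACH = 0.9                         # assumed reach limit from the arm base at the origin
WIDTH_RANGE = (0.01, 0.05)              # widths outside this range treated as bad measurements
W_HEIGHT, W_WIDTH, W_CONF, W_DIST = 1.0, 2.0, 3.0, 4.0  # hypothetical weights

def is_valid(stalk: Stalk) -> bool:
    """Eliminate blocked, unreachable, or physically implausible stalks."""
    if stalk.blocked:
        return False
    if math.dist(stalk.grasp_point, (0.0, 0.0, 0.0)) > MAX_REACH:
        return False
    lo, hi = WIDTH_RANGE
    return lo <= stalk.width <= hi and stalk.height > 0.0

def score(stalk: Stalk) -> float:
    """Weighted sum: reward height, width, confidence; penalize distance from the optimal grasp."""
    distance = math.dist(stalk.grasp_point, OPTIMAL_GRASP)
    return (W_HEIGHT * stalk.height + W_WIDTH * stalk.width
            + W_CONF * stalk.confidence - W_DIST * distance)

def best_stalk(stalks: Sequence[Stalk]) -> Optional[Stalk]:
    """Return the highest-scoring valid stalk, or None if every stalk was eliminated."""
    candidates = [s for s in stalks if is_valid(s)]
    return max(candidates, key=score, default=None)

# Example: one good stalk, one blocked stalk, and one beyond the arm's reach.
example = [
    Stalk((0.5, 0.05, 0.3), height=0.4, width=0.02, confidence=0.9),
    Stalk((0.6, -0.1, 0.3), height=0.5, width=0.03, confidence=0.8, blocked=True),
    Stalk((1.5, 0.0, 0.3), height=0.6, width=0.03, confidence=0.95),
]
chosen = best_stalk(example)  # the first stalk; the other two are filtered out
```

Keeping each weight as a separate constant mirrors the point that the weighting could be retuned for another task. A different manipulator would only need new constants, not a new selection routine.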

Consensus Among Frames

This process is repeated over a number of frames. The best stalks from all frames are then clustered, and the largest cluster is taken as the consensus stalk. A representative stalk is chosen from this cluster, and its grasping point becomes the final grasping point.
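One way to realize the consensus step is sketched below. It is a sketch under stated assumptions, since the text names neither the clustering method nor how the representative is picked.

In this sketch, the per-frame best grasping points are grouped by single-linkage clustering with a distance threshold, implemented with union-find. The representative of the largest cluster is taken to be its medoid. The names `cluster_points`, `consensus_grasp_point`, and `CLUSTER_RADIUS`, and the 3 cm radius, are all hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) grasping point, metres

CLUSTER_RADIUS = 0.03  # hypothetical: points within 3 cm are treated as the same stalk

def cluster_points(points: Sequence[Point], radius: float) -> List[List[int]]:
    """Single-linkage clustering: points linked by gaps <= radius share a cluster."""
    parent = list(range(len(points)))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps the trees shallow
            i = parent[i]
        return i

    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            if math.dist(points[i], points[j]) <= radius:
                parent[find(i)] = find(j)  # merge the two clusters

    groups: Dict[int, List[int]] = {}
    for i in range(len(points)):
        groups.setdefault(find(i), []).append(i)
    return list(groups.values())

def consensus_grasp_point(best_per_frame: Sequence[Point],
                          radius: float = CLUSTER_RADIUS) -> Optional[Point]:
    """Return the medoid of the largest cluster of per-frame best stalks."""
    if not best_per_frame:
        return None
    largest = max(cluster_points(best_per_frame, radius), key=len)
    # Medoid: the member with the smallest total distance to the rest of its cluster.
    medoid = min(largest, key=lambda i: sum(
        math.dist(best_per_frame[i], best_per_frame[j]) for j in largest))
    return best_per_frame[medoid]
```

A medoid is used rather than the cluster mean so that the final grasping point is always one that was actually measured on a stalk.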

If no valid stalks are detected in any frame, a reposition response is returned. This indicates to the robot that the current viewpoint is insufficient to estimate a reasonable insertion pose. It may happen when there are no stalks in view or when the stalks are too difficult to process.
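The fallback can be expressed as a response type with two cases. In this minimal sketch, `Grasp`, `Reposition`, and `final_response` are hypothetical names. A frame with no valid stalk is assumed to report `None`. The `consensus` argument is a stand-in for whatever clustering step produces the final grasping point.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence, Tuple, Union

Point = Tuple[float, float, float]  # (x, y, z) grasping point, metres

@dataclass(frozen=True)
class Grasp:
    point: Point  # final grasping point sent to the arm

@dataclass(frozen=True)
class Reposition:
    reason: str = "no valid stalks detected in any frame"

Response = Union[Grasp, Reposition]

def final_response(best_per_frame: Sequence[Optional[Point]],
                   consensus: Callable[[Sequence[Point]], Point]) -> Response:
    """Return a grasp if any frame produced a valid stalk; otherwise ask the robot to reposition."""
    valid = [p for p in best_per_frame if p is not None]  # frames with no valid stalk report None
    if not valid:
        return Reposition()
    return Grasp(consensus(valid))
```

Returning an explicit `Reposition` value, rather than raising an error, lets the caller treat "move and try again" as a normal outcome of the pipeline.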

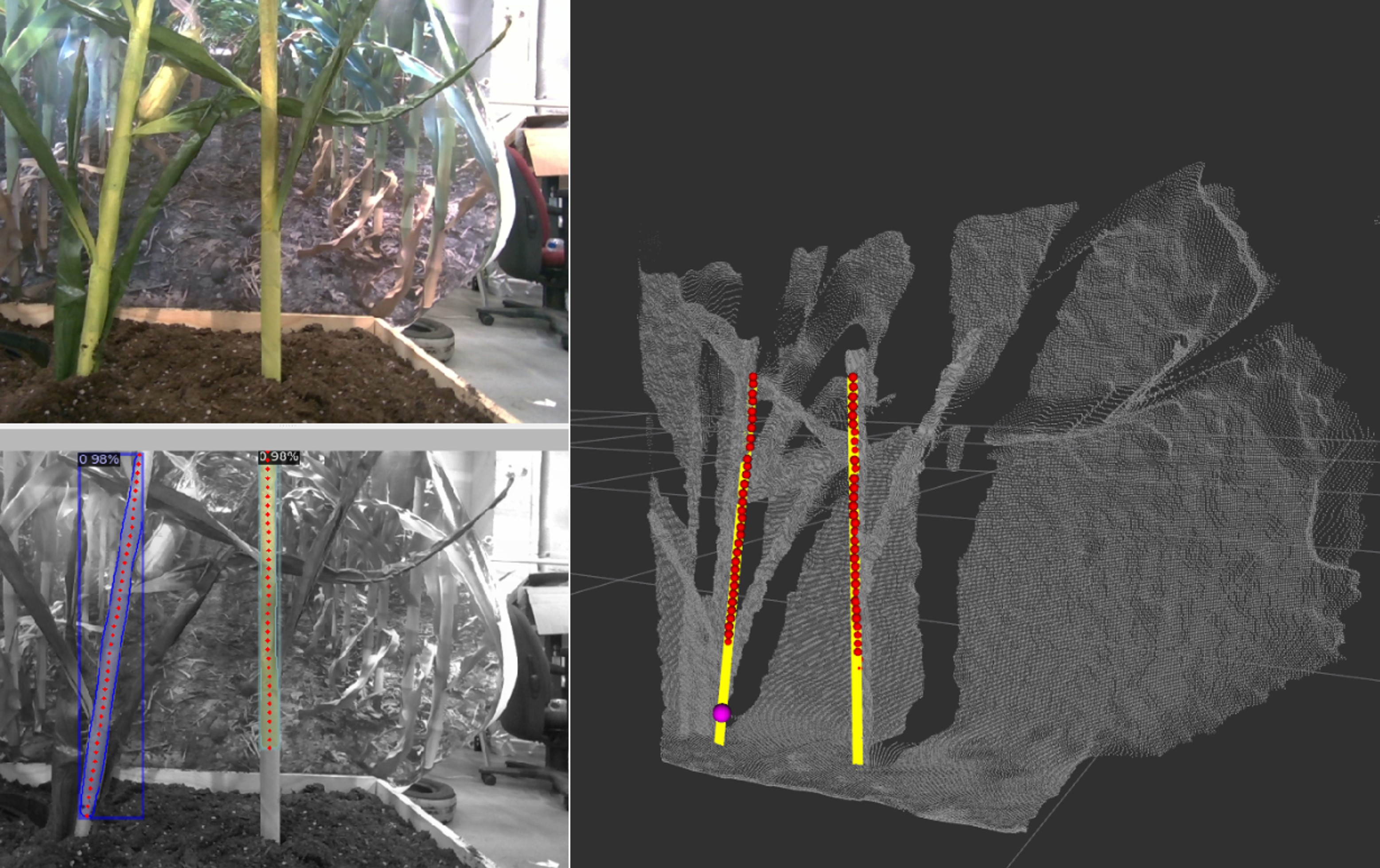

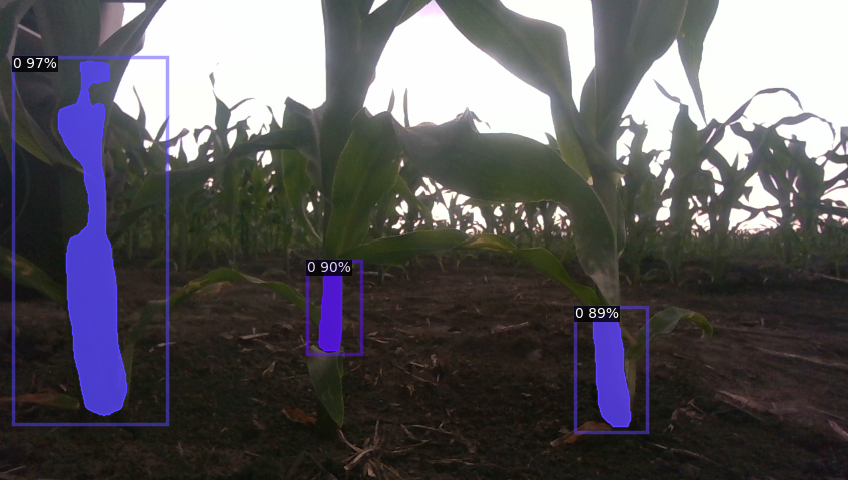

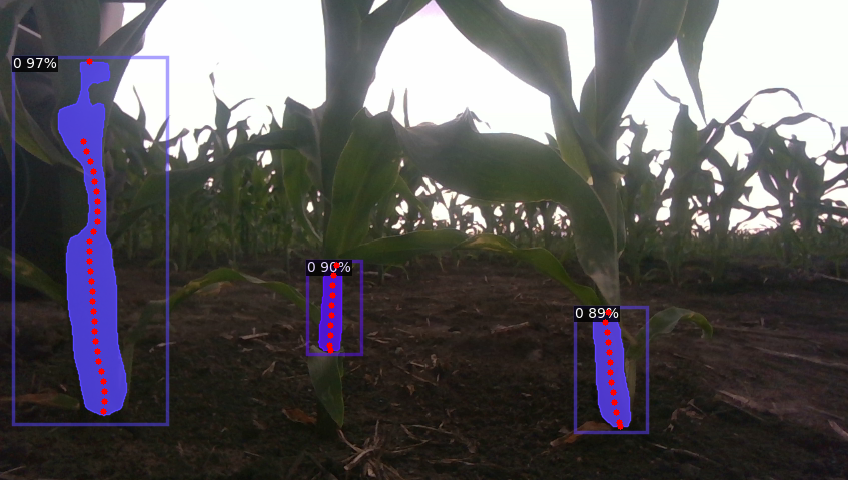

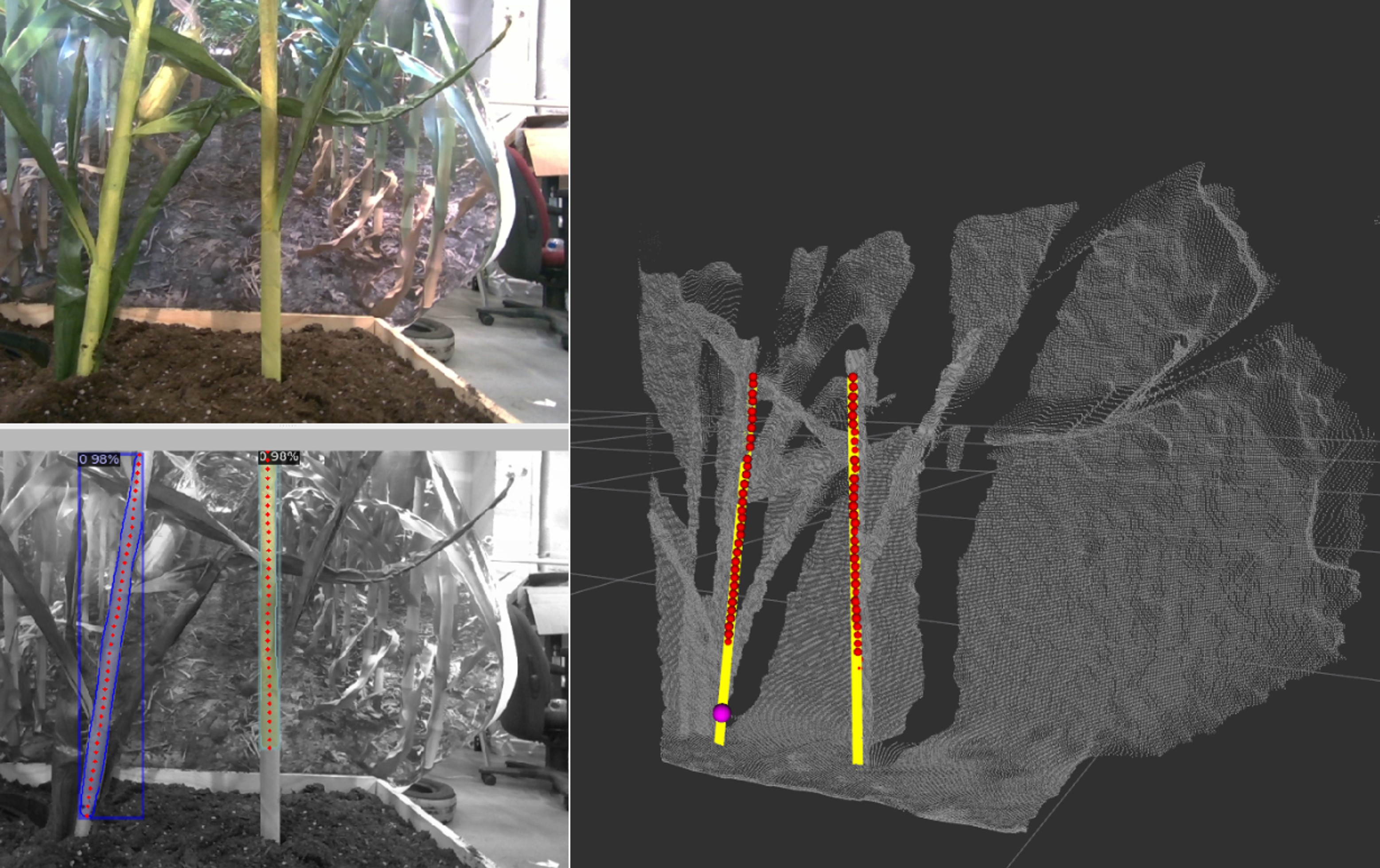

Qualitative Evaluation with Mock Setup

We tested the detection pipeline on both synthetic corn and real corn grown in the indoor greenhouse.